Suing TikTok Won’t Solve Our Social Media Problems

As I write this newsletter, my 8-year-old is watching YouTube, alternating between PrestonPlayz, uh, playing video games and MrBeast giving away money. (Cut a working mom a break, man.) Gen X parents like me might not understand the appeal such content has for kids, but it’s made creators very, very rich.

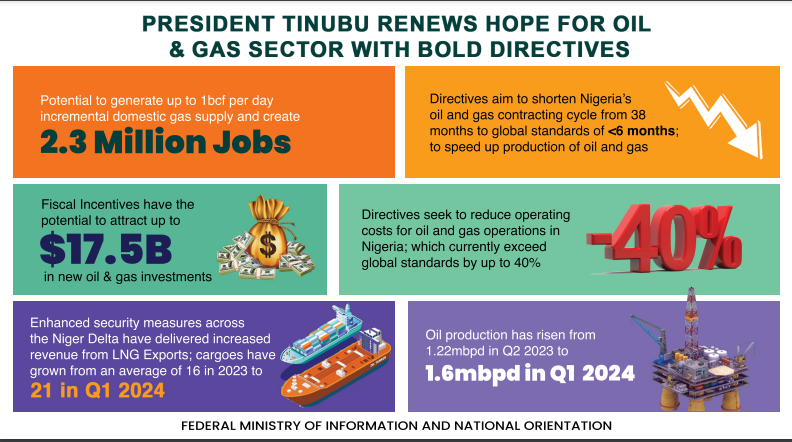

Kids these days are, despite even the best parents’ efforts, growing up on social media, with some developing unhealthy viewing habits on Instagram, TikTok and YouTube. Lest you think you’re immune to becoming addicted, let this chart on adult TikTok usage disabuse you of such a notion:

While those views clearly skew young, growth is being logged across all age groups, as Pew Research Center data show. That’s a huge trend that is surely only going to continue as Facebook and X users stop using those platforms to get their news. Other platforms — LinkedIn, Twitch, Reddit, Nextdoor and whatever we come up with next — are going to want to capitalize in any way they can.

Social media companies “want your attention; they work to keep it. That’s quintessential publishing behavior,” writes Stephen L. Carter. Enter the lawyers: Hundreds of lawsuits have been filed by parents, organizations and state attorneys general against those companies, alleging that social media gets young viewers addicted, which damages their health. A judge tossed a large chunk of those suits. “Not every problem can be fixed by filing the right lawsuit,” Carter continues. “Judge Rogers is correct in her view that Section 230 protects social media platforms when they act like publishers.”

But do social media companies bear some responsibility for keeping children and teens away from the worst the digital world tries to give them? How would that even work, balancing privacy concerns with parents’ right to protect their kids? “Options are not lacking to protect children — we just need to recognize the right ones when we see them,” Dave Lee writes. Meta (!) has called for a federal regulation to add an age verification process to popular app stores, arguing for Apple and Google to always obtain a parent’s approval whenever a child younger than 16 tries to download an app from their stores. “When one obvious step can be taken — one with, as far as I can see, no negative consequences — we shouldn’t hesitate to take it,” Lee says.

That still leaves us with a worsening mental health crisis. “Social media certainly plays a role in kids’ deteriorating emotional state,” Lisa Jarvis writes. (Not that adults are doing so hot these days, either, as Niall Ferguson reminded us.) “But so, perhaps, do our well-meaning attempts to cocoon kids from harm.” Kids are going to make mistakes … and we, as adults, know that’s how you learn and develop independence. “Giving kids space often defies our parental instincts — particularly our sense that more information about our kids’ inner lives is always better,” Lisa says. “But as they grow up, constantly supervising them isn’t a solution, either.”

I’m not prying my kid away from his (monitored, I promise) YouTube anytime soon. The two of us watch cat videos on TikTok together, a modern-day bonding experience. So if parents and social media companies can maybe work together to set some reasonable, constitutionally acceptable boundaries, we can keep the bloodthirstiest of the digital wolves at bay. For the kids, anyway.

Bloomberg